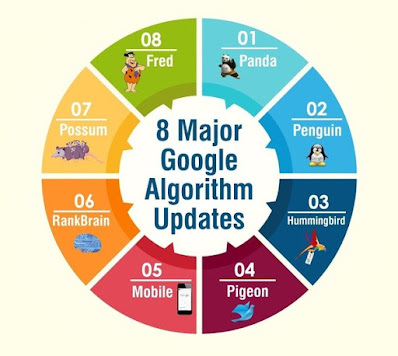

GOOGLE ALGORITHM UPDATES

To improve the quality of its search results, Google releases algorithm updates that counter the spammy techniques practiced by webmasters.

Google Panda Update - 2011

With Panda, Google imposed penalties for content spamming by webmasters. Content duplication, thin content, user-generated spam, and keyword stuffing are among the cases that trigger a penalty. Let's look at the conditions in detail.

- Content duplication: Content copied from other sites. The duplicate content issue can also happen on your website when you have multiple pages featuring the same text with little or no variation.

- Content farming: A large number of low-quality pages often aggregated from other websites.

- High ad-to-content ratio: Pages that contain more paid ads than original content.

- Thin content: Weak pages with very little relevant text and resources.

- Low-quality user-generated content: Pages made up of user-submitted posts that are full of grammatical or spelling errors and lack authoritative information.
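To make the idea of content duplication concrete, here is a minimal sketch that compares two pages by the overlapping three-word sequences ("shingles") they share. This is a common textbook technique for spotting near-duplicate text, not Google's actual Panda logic; the function names and sample pages are made up for illustration.

```python
import re


def shingles(text, size=3):
    """Return the set of overlapping word n-grams (shingles) in the text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a, b, size=3):
    """Share of shingles the two texts have in common (0.0 to 1.0)."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


# Hypothetical page texts: page_b is page_a with a single word swapped.
page_a = "Buy cheap running shoes online with free shipping today"
page_b = "Buy cheap running shoes online with free delivery today"
page_c = "Our guide explains how to choose trail footwear for wet weather"

print(jaccard_similarity(page_a, page_b))  # high overlap: near-duplicate
print(jaccard_similarity(page_a, page_c))  # no overlap: unrelated text
```

A site audit could flag page pairs whose similarity exceeds some threshold; the right threshold is a judgment call and would need tuning on real pages.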

Google Penguin Algorithm - 2012

The Google Penguin update targeted two specific practices: manipulative link schemes and keyword stuffing.

What triggers the Google Penguin algorithm?

- Paid link recommendations or purchasing backlinks from low-quality or unrelated websites to create an artificial picture of popularity and relevance.

- Reciprocal exchange of backlinks between sites to increase rankings.

- Backlinks (recommendations) from low-quality, spammy pages.

- Building links using artificial methods or automated mechanisms.

- Hacking websites and getting backlinks from them.

- Adding backlinks through unwanted or unrelated comments.

- Adding backlinks through user-editable pages (e.g. Wikipedia), a link scheme practiced by webmasters to achieve high rankings.

In 2016, the Penguin algorithm was made real-time, so changes become visible much faster, shortly after Google recrawls and reindexes the pages.
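One of the patterns listed above, the mutual exchange of backlinks, is easy to illustrate on a small link graph. The sketch below is only an illustration of what "reciprocal links" means, not how Penguin detects them; the site names and the `reciprocal_pairs` helper are hypothetical.

```python
def reciprocal_pairs(links):
    """Return sorted pairs of sites that link to each other."""
    link_set = set(links)
    pairs = set()
    for source, target in link_set:
        # A pair is reciprocal when the reverse link also exists.
        if source != target and (target, source) in link_set:
            pairs.add(tuple(sorted((source, target))))
    return sorted(pairs)


# Hypothetical link graph: each tuple is (linking site, linked site).
links = [
    ("shop-a.example", "shop-b.example"),
    ("shop-b.example", "shop-a.example"),
    ("blog.example", "shop-a.example"),
    ("news.example", "blog.example"),
]

print(reciprocal_pairs(links))
```

A reciprocal link is not spam by itself; two genuinely related sites often link to each other. The pattern only becomes suspicious at scale or between unrelated sites.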

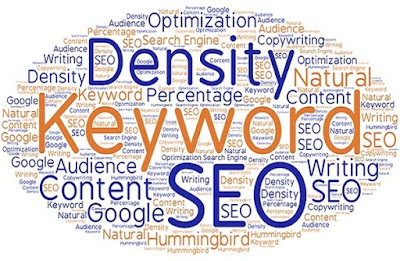

Google Hummingbird Algorithm Update - 2013

Hummingbird has been cited as a major overhaul of Google's core algorithm rather than an add-on like the Panda or Penguin updates.

- It changed how the search engine works by focusing on synonyms and theme-related topics.

- Because Hummingbird used context and intent to deliver results that matched the needs of the user, local results became more precise.

- It allowed users to search confidently for topics and subtopics.

- Hummingbird helped Google give semantic search results to the user, i.e. results improved by focusing on the intent of the user and on how the subject of the search relates to other information in a wider sense.
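The difference between exact keyword matching and synonym-aware matching can be sketched in a few lines. The synonym table below is hand-written and hypothetical; a real search engine would learn such relations from data, and this is in no way Hummingbird's actual implementation.

```python
# Hypothetical, hand-written synonym table used only for this example.
SYNONYMS = {
    "cheap": {"affordable", "inexpensive"},
    "hotel": {"accommodation", "lodging"},
}


def exact_match(query, document):
    """True only if every query word appears literally in the document."""
    doc_words = set(document.lower().split())
    return all(word in doc_words for word in query.lower().split())


def synonym_match(query, document):
    """True if every query word, or one of its synonyms, appears in the document."""
    doc_words = set(document.lower().split())
    return all(
        ({word} | SYNONYMS.get(word, set())) & doc_words
        for word in query.lower().split()
    )


document = "affordable lodging near the beach"
print(exact_match("cheap hotel", document))    # False: no literal keyword overlap
print(synonym_match("cheap hotel", document))  # True: matched through synonyms
```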

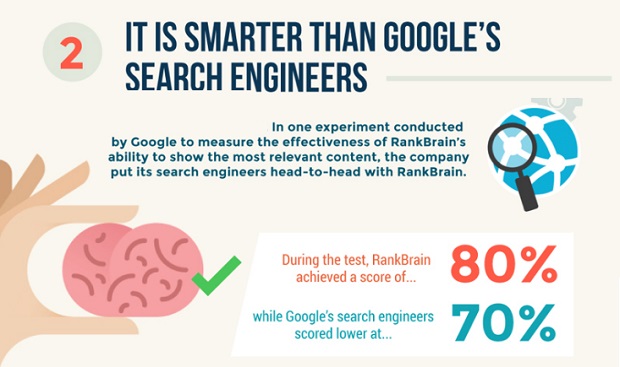

Google RankBrain Update - 2015

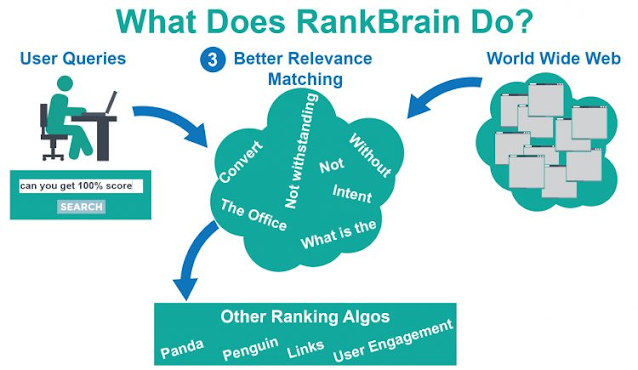

RankBrain is a machine-learning algorithm that Google uses to sort search results. Depending on the keyword, RankBrain will increase or decrease the importance of backlinks, content length, content freshness, domain authority, etc.

- Signals such as user location and content freshness are taken into account to interpret intent and deliver the results most likely to satisfy searchers.

- Pre-RankBrain, Google used its basic algorithm to determine which results to show for a query.

- Post-RankBrain, it's believed that the query goes through an "interpretation model" that can apply factors such as the user's location, personalization, and the words of the query to determine the user's true intent and thus deliver more relevant results.

Importance of RankBrain: Let's consider an example.

When a user searches for "Olympics location", Google tries to work out the user's intent. Imagine that the Olympics just concluded in Russia and the official website of the Olympics earned millions of links for its content about the past event.

If your algorithm is simplistic, it may show results about the Sochi Games, because they have earned the most links.

This is where RankBrain comes in. By mathematically analyzing the patterns it "notices" in searcher behavior, the machine-learning algorithm can infer that users searching for "Olympics location" are looking for the location of the upcoming Games. In this case, Google will show links about the locations of the Olympics to be held in upcoming years.
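The Olympics example can be turned into a toy comparison between a link-only ranking and one that looks at searcher behavior. All numbers below are invented for illustration, `satisfied_share` is a hypothetical stand-in for whatever behavioral signal a real system might use, and the sketch says nothing about how RankBrain actually works internally.

```python
# Made-up numbers purely for illustration; not real data.
results = [
    {"title": "Sochi Games recap", "links": 5_000_000, "satisfied_share": 0.10},
    {"title": "Next host city", "links": 200_000, "satisfied_share": 0.85},
]


def rank_by_links(candidates):
    """Simplistic ranking: the page with the most backlinks wins."""
    return sorted(candidates, key=lambda r: r["links"], reverse=True)


def rank_by_behavior(candidates):
    """Rank by the (hypothetical) share of searchers the page satisfied."""
    return sorted(candidates, key=lambda r: r["satisfied_share"], reverse=True)


print(rank_by_links(results)[0]["title"])     # the heavily linked past event
print(rank_by_behavior(results)[0]["title"])  # what searchers actually wanted
```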

Google Pigeon Update - 2014

The Pigeon update helps provide more useful, relevant, and local search results to users.

Google stated that this update improves their distance and location ranking parameters to provide local, relevant results to the user based on proximity.
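Proximity-based ranking can be sketched with the haversine formula, which gives the great-circle distance between two latitude/longitude points. This is a generic distance calculation, not Google's ranking formula, and the searcher location and business coordinates below are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def sort_by_proximity(user, businesses):
    """Order (name, lat, lon) tuples from nearest to farthest from the user."""
    return sorted(
        businesses,
        key=lambda b: haversine_km(user[0], user[1], b[1], b[2]),
    )


# Hypothetical searcher position and business listings.
user = (40.7580, -73.9855)
businesses = [
    ("Cafe far", 40.6782, -73.9442),
    ("Cafe near", 40.7590, -73.9845),
    ("Cafe mid", 40.7306, -73.9866),
]

for name, lat, lon in sort_by_proximity(user, businesses):
    print(name, round(haversine_km(user[0], user[1], lat, lon), 2), "km")
```

In practice distance would be only one of several local signals, weighed alongside relevance and prominence.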

This update was cited as the most impactful update since the Google Venice update in 2012.

To improve local rankings, webmasters should get their sites verified on Google My Business, usually by mailed letter, or by OTP or phone call.

Adding local keywords to the homepage, listing the website's details in local directories, embedding a map on the homepage, and getting interactions from local users through social media also help increase the website's local ranking.

Parked Domain Update

This update was made to keep parked domain websites out of Google's search results pages. Parked domain sites are placeholder sites that are seldom useful and often filled with ads.

Parked Domain sites have no valuable content for users, so Google prefers not to show them in SERPs.

EMD or Exact Match Domain Update - 2012

Google targeted exact match domain names in this update. However, the intention was not to target exact match domains exclusively, but to target poor-quality sites that combine an exact match domain with spammy tactics and thin content.
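As a rough illustration of that combination, the sketch below checks whether a domain name is an exact match for a query and only flags it when the page is also thin. The helper names, the example domains, and the 300-word threshold are all assumptions made for this example; Google has not published such a rule.

```python
def is_exact_match_domain(domain, query):
    """True if the domain name, minus 'www.', TLD, and hyphens, equals the query words joined."""
    host = domain.lower()
    if host.startswith("www."):
        host = host[4:]
    name = host.split(".")[0].replace("-", "")
    return name == "".join(query.lower().split())


def looks_like_low_quality_emd(domain, query, word_count, min_words=300):
    """Flag only the combination: exact match domain AND thin content.

    min_words is an arbitrary, hypothetical threshold for "thin" content.
    """
    return is_exact_match_domain(domain, query) and word_count < min_words


print(is_exact_match_domain("www.cheap-flights.example", "cheap flights"))
print(looks_like_low_quality_emd("cheapflights.example", "cheap flights", 120))
print(looks_like_low_quality_emd("cheapflights.example", "cheap flights", 2000))
```

The last call shows the point of the update: an exact match domain with substantial content is not flagged.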

Pirate Update - 2012

The Pirate update was introduced as a filter to prevent sites with many copyright infringement reports, as filed through Google's DMCA system, from ranking well in search listings. It demotes sites that have received a large number of valid copyright removal notices.

Mobilegeddon Algorithm - 2015

On April 21, 2015, Google released a significant new mobile-friendly algorithm to give a boost to mobile-friendly pages in Google's mobile search results.

Searchers could more easily find relevant, high-quality results on pages where text is readable without tapping or zooming, tap targets are spaced appropriately, and the page avoids unplayable content and horizontal scrolling.

While the mobile-friendly change is important, Google still uses a variety of signals to rank websites. A page that is not mobile-friendly can still rank high if it has great content for the query.
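A couple of the mobile-friendliness hints mentioned above can be checked directly in a page's HTML. The sketch below uses Python's standard `html.parser` module to look for a viewport meta tag (commonly needed so text is readable without zooming) and for plugin-based content such as `<object>` or `<embed>` (which is often unplayable on phones). These two checks are assumptions chosen for illustration and are far from a complete mobile-friendliness test.

```python
from html.parser import HTMLParser


class MobileHintParser(HTMLParser):
    """Collects two simple mobile-friendliness hints from HTML markup."""

    def __init__(self):
        super().__init__()
        self.has_viewport = False
        self.has_plugin_content = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # <meta name="viewport" ...> tells mobile browsers how to scale the page.
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.has_viewport = True
        # <object>/<embed> usually wrap plugin content that phones cannot play.
        if tag in ("object", "embed"):
            self.has_plugin_content = True


def mobile_hints(html):
    """Return the two hints as a dictionary for the given HTML string."""
    parser = MobileHintParser()
    parser.feed(html)
    return {
        "viewport": parser.has_viewport,
        "plugin_content": parser.has_plugin_content,
    }


# Hypothetical sample pages.
responsive_page = (
    '<html><head><meta name="viewport" '
    'content="width=device-width, initial-scale=1"></head>'
    "<body><p>Hello</p></body></html>"
)
legacy_page = '<html><body><embed src="intro.swf"></body></html>'

print(mobile_hints(responsive_page))
print(mobile_hints(legacy_page))
```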